improved-word-alt-text

Web page for final project, Improved Word Alt Text, completed as part of The University of Washington course "The Future of Access Technology"

View the Project on GitHub thenorthwes/improved-word-alt-text

Improved Word Alt Text

This project’s goal is to develop a Word plug-in which modifies the default behavior in Microsoft word when an image is inserted such that the user is prompted with a dialog box that guides them to create alt text. The goal is to raise alt text awareness so it is not forgotten in a hidden menu; and suggest best practices so that alt text is high quality and contextually relevant to the image’s intended use.

Video

Introduction: Present the promise/ obstacle/ solution for your project— What is the problem you are solving and why is it important to solve it?

Missing and/or bad alternative text for images in Office documents pose a significant challenge to users with visual impairments. Adding alt text to images is usually an afterthought for most content creators and with better and more proactive tooling, content creators will be reminded and encouraged to write better alt text more frequently thereby increasing the amount of well-described images in Office documents. Initially we were focused on creating an add-in for MS PowerPoint but the PowerPoint JS API isn’t as robust and so we pivoted to making an add-in which will work for MS Word.

The current approach to alt text in Office has 2 main problems we aim to solve with our plugin. Primarily the alt text UI is not proactive and it requires the user to manually search for it or manually use the accessibility checker. This is likely in favor of auto-opening the Design Ideas feature (in PPT) and to avoid annoying users, as accessibility does not have the importance and awareness for most users that it should. Another issue we aim to solve is that the alt text UI either lets the user do all the writing with minimal guidance, or generates a totally automated caption, regardless of context of document. Either the latest research around how to write good alt text just hasn’t made its way into the user interface yet, or they wanted to keep the guidance as simple as possible. We aim to improve the prompt about writing good alt text by reccomending best practices from reputable best practices we identify.

Our solution to overcome these obstacles is to build an Office add-in that users can add to their installation of Office in the web and their installed product. The add-in would work as a replacement for the existing Alt text UI.When a user adds an image to their document, the add-in would automatically open the task pane and prompt them to add alt text for the image. The add-in would guide the user to writing good alt text based on the contents and context of the image, using category-based guidance (people, chart/figure, landscape, etc.). The user can expand their chosen category and read detailed guidance in the pane. This will improve upon the current experience, which provides static guidance for every image.

Related Work: Talk about relevant work that closely connects with your project.

One of our sources, “Person, Shoes, Tree. Is the Person Naked?” What People with Vision Impairments Want in Image Descriptions, was immensely helpful for motivating our project. Their findings revealed how image description preferences vary based on the source where digital images are encountered and the surrounding context. They interviewed blind and low-vision participants about their experiences and preferences about digital images in different sources. Most relevant to our work, they found that participants reported low engagement with image descriptions in productivity applications due to the fact that they were frequently missing, particularly in Word and PowerPoint documents. They also found that participants wanted image descriptions that were highly context-sensitive to the contents of the document, to give the alt text users as close of a representation to the sighted experience as possible.

Another of our sources, Understanding Blind People’s Experiences with Computer-Generated Captions of Social Media Images helped inform our perspective on the auto-generation of image descriptions done by Word and PowerPoint when the user inserts an image that is missing alt text. They investigated the trust that blind and low vision users place in computer-generated captions of social media images based on phrasing. They focused on Twitter and found that participants placed a great deal of trust in the auto-generated captions, often resolving differences between the tweet text and an incongruous caption by filling in imagined details. They concluded that users are trusting even of incorrect AI-generated captions and encouraged questioning of the accuracy of AI captioning systems. This helped inform our perspective that the auto-captioning done by Office applications, which were not very accurate in our testing, should not be relied upon by users to provide accurate image descriptions. Furthermore, since these auto-generated captions do not take the context of the documents into account, they will necessarily not be good image descriptions according to the perspective of users in the source mentioned above.

Finally, the paper “It’s Complicated”: Negotiating Accessibility and (Mis)Representation in Image Descriptions of Race, Gender, and Disability helped inform our add-in’s guidance on how to write good image descriptions with people in them. The paper motivated the importance of not making assumptions about people’s gender, race, disability, and other identifying characteristics based on an image alone, and not describing them in the alt text of an image unless they have the photographed person’s consent. This is one of the emerging spaces of respectful dialogue that we really felt was not well captured by out-of-the-box alt text guidance in Office applications, which does not touch at all on how to describe people. The guidance provided by this paper on how to describe people respectfully was very helpful in guiding our implementation.

Solution: What did you do in your project- what did you design or implement?

We built an Office add-in for Word that users can add to their installation of Word to help them write better alt text, more consistently. The add-in technically supplements, but would functionally replace, the existing UI for adding alt text to an image. It has a couple of key features:

- When a user adds an image to their document, if that image does not have alt text, then when the user selects that image, the add-in automatically prompts them to add alt text for the image. This is done through a dialog pop-up. (We intended for this functionality to work such that when the user inserted the image the task pane for our add-in automatically opened to prompt them to add the alt text, but this functionality proved impossible to troubleshoot and achieve in the time available.)

- The add-in guides the user toward writing better alt text based on the contents and context of the image, using category-based guidance (people, chart/figure, etc.). The user answers a series of questions pertaining to their image for each category and receives tailored suggestions for writing clear, useful, and respectful alt text. This improves upon the default experience, which provides minimal, static guidance for every image.

- Finally, if the user’s provided alt text does not align very closely with the surrounding text of their document, the add-in will suggest that the alt text doesn’t appear to be very relevant to the context and prompt the user to reevaluate it. This is implemented via a threshold on the text similarity of the alt text and the surrounding text context. The ‘semantic’ text similarity is determined by using the Universal Sentence Encoder model which was reported to provide good results in this type of tasks.

By helping content creators remember to add informative alt text, hopefully we can improve the experience of blind and low-vision users reading them.

Validation: What did you validate/what was your validation approach? What were the results of your validation?

We approached our project with two intentions of validation. First we were trying to validate if this JS plug was this technically feasible and does it smoothly function within Office products. As mentioned, we wanted to develop this for PowerPoint, however the add-in API to image descriptions did not seem to be working as intended, to verify this was feasible we initially we approached the Office developers in two ways: first, after reading the documentation, it seemed that this is supported, but in practice it does not work, so we opened them an isuse on the official office-js github repo which to this date remains assigned but unanswered. Second, we posted a question in StackOverflow their official tag (office-js), we recieved an answer eventually by a developer in PowerPoint, confirming this is not supported. Due to the uncertainity and delayed answers, we prematurely pivoted to Word as it was explained earlier, here we based our work on the documented APIs to retrieve/modify image descriptions and to attach handlers upon user events, which validated this add-in was feasible to build based on the active selected element (rather than automatically prompting on created images).

The second validation we worked on was validation of our premise. Does more information about alt text best practices improve a content creator’s image descriptions. Although inuitively true we wanted to be see what would change for ourselves. We developed two Google forms with 3 images. The first form just asked for image descriptions (alt text). The second form included the object-action-context mantra and shared the ‘intent’ of the image in the hypothetical content. We sent this out to our class, asking those with even / odd birthday dates to go to form A/B. We did talk about how our population wasn’t refelctive of a real world population since it was made up of students who have recently studied accessibility technology and best practices.

Our results weren’t ideal, 10 people responded to the contextless form while we only received 2 to our form with tips. However, a few interesting items are noticable.

- For a chart we included, without context descriptions ranged widely from simple description of the title to explanations of the time without any data to fairly relvevant but verbose descriptions. When we gave context about the key takeaway, we got very common alt text description. We feel this shows the importance of a content creator stopping to consider the context of the image. We also noted that when we suggested the key takeaway for the chart, on average our responses were 10 words shorter.

- For the 2nd image, of a child sledding on snow. We got further validation that providing context creates much more consistent & common descriptions

- Our two responses in the B form also were shorter, which from our studying & reading seems to be preferred in many contexts.

- Overall our population of respondants didn’t seem to consistently use gendered language one way or the other between the two forms. In the second form we did note if gender was known or not important. However many respondents in the A form who were given no gender information still refrained from assumptions. We attribute this mostly to our unique population.

Overall, we do feel that our limited results show that considering context, and thus best practices, has a positive net impact on image description quality. But much more scientific work should be done to validate. The biggest noticable area of change was the chart description, suggesting charts may be the most hard to describe without considering context and intended takeaway.

Add-in limitations

There are two areas with possible shortcomings on the final implementation. The first and more noticeable is the amount of categories, we included four categories: decorative, image with people, chart; keeping in mind that decorative images are ubiquitous on any type of document, likewise image with people, and charts are dominant in formal documents and presentations. However, this is by no means an extensive list, given an image of a less general domain, there is not sufficient guidance to write a good alt text based on the image contents. Future work includes increasing the amount of categories, possibly adding sub-categories for some domains.

Second, the problem of determining if the image description is a good semantical description of the surrounding text and if it conveys the intent the image is trying to describe is a complex and a somewhat ill-defined task (since it is relative to the author’s intentions). A reasonable approximation is determining how related are the description with the text context, which on itself is a complex task as well. Here are two examples where our add-in fails on this task:

Example 1

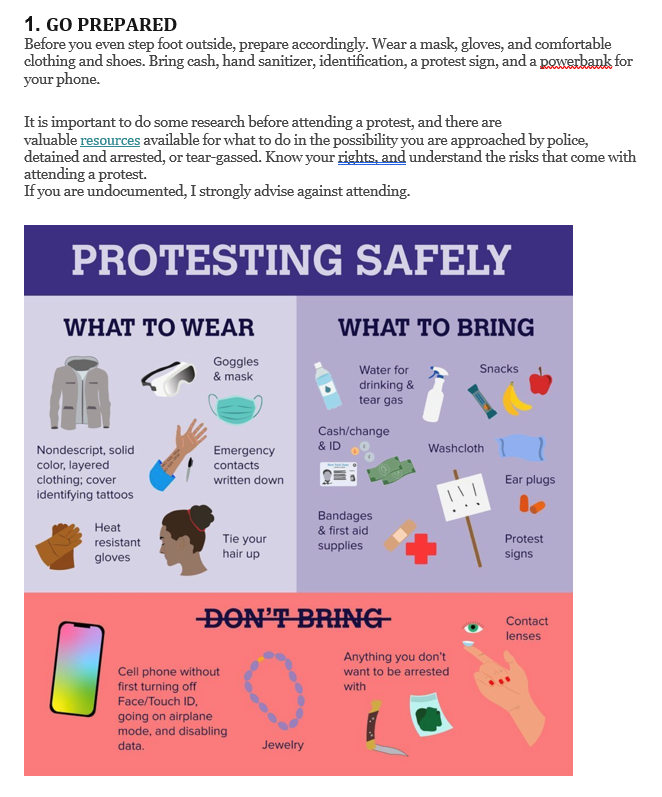

The following example is an screenshot of this article transcribed to word. It contains an image with icons about what to wear, bring and do not bring to a protest. The description talks about how to go prepapared to a protest and what risks to expect when attending to one.

Trying the following captions showcase the limitation of the add-in:

- “goggles, mask, gloves, cash.”: this description is a mere transcription of some of the icons in the image (something that an automated service could generate). In this case, the add-in does not alert anything. This is not flagged because the same disconnected words are scattered in the description. However, in this case is important to detail all the icons in the image, list and call out which ones to bring and not to bring.

- “An image of a protest”: again, here the model is likely just taking advantage of the fact that ‘protest’ exists on the document multiple times. However, this is neither a description of the image (superficial or not), or a good alt text.

Example 2

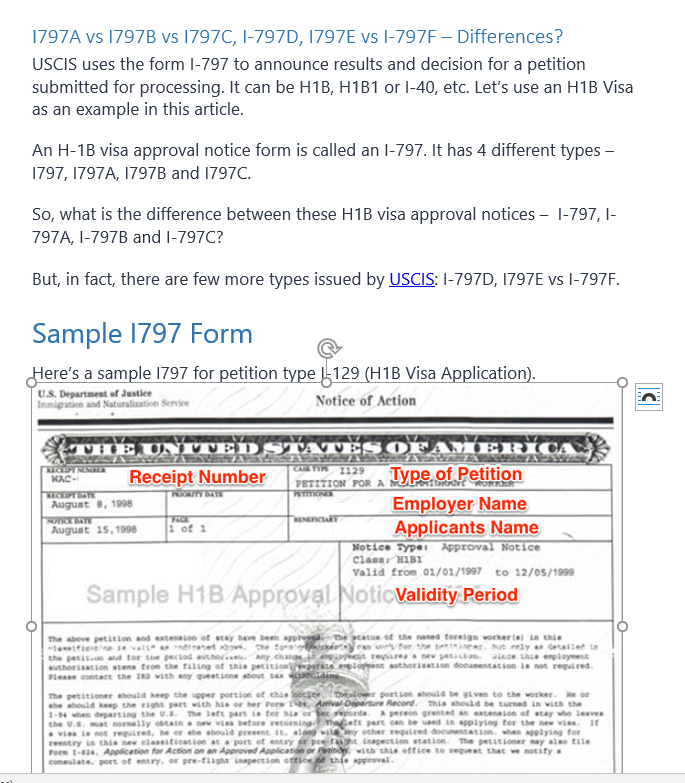

The following example is an screenshot of this article transcribed and editted in word. It contains an image with a screenshot of the I-797 form, and a description of different types of visa approval notices with a brief point about how USCIS uses these forms.

The caption “Work permission image” is flagged as not being relevant with the surrounding context. This is clearly not the case, since that is the literal description of the image, and is important to the context of the article. Here what is probably happening is that the lingo of the context is too domain specific (US inmigration paperwork and terminology), which can be absent in the training data of the model used to compare the alt text and the context.

These two examples showcase two key problems of these types of models in general: robustness and domain shifting. This is why the approach in this add-in is a rather ‘passive’ suggestion, instead of trying to generate a description or get in the way of the user about the final alt-text. While this can be useful in some situations, it is possible is not applicable to all domains. This is why the deployment of this add-in would need to consier a broader range of categories, both on image description types, and on the eventual training of the text similarity model (we did not train this model ourselves).

Learnings and future work: Describe what you learned and how this can be extended/ built on in the future.

Firstly, we think there is a lot of promise in the JS-API in the long run. Being able to have a common plugin experience in the web, or on a desktop is a wonderful advancement. It will significantly improve the portability of accessibility technology plugins developed. As one of our speakers mentioned, portability is important to her in being able to access and migrate their experience commonly.

Our study of Alt text best practices in the world didn’t result in any ‘cannonical’ guidance. We think there is significant opportunity for study on best practices and development of open source guides. There are communities with opinions, and effective guidance was uncovered, however we feel that academic/rigorous studies and results would improve the common understanding of what does ‘good look like’. These studies may also serve to persuade more investment in existing Alt Text UIs and prompting by showing the change in human behavior.

References And Links

Code is available on GitHub University of Washington Course Page